Pose Estimation For Monocular Vision Cameras

Extrinsic Parameters

Given the calibration parameters (namely, the camera intrinsic parameters) and any type of calibration board as a reference, the camera pose relative to the board can be estimated. If the board's position in the world frame is known, the camera's extrinsic parameters (namely, the camera's pose relative to the world frame) can then be calculated.
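The step from "pose relative to the board" to "pose relative to the world frame" is a composition of rigid transforms. The following is a minimal sketch of that composition, assuming NumPy only. The helper names (`make_transform`, `invert_transform`) and all numeric poses are hypothetical and chosen purely for illustration; they do not come from the experiments on this page.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    transform = np.eye(4)
    transform[:3, :3] = rotation
    transform[:3, 3] = translation
    return transform


def invert_transform(transform):
    """Invert a rigid transform using R^T rather than a general matrix inverse."""
    rotation = transform[:3, :3]
    translation = transform[:3, 3]
    inverse = np.eye(4)
    inverse[:3, :3] = rotation.T
    inverse[:3, 3] = -rotation.T @ translation
    return inverse


# Hypothetical known board pose: board origin 1000 mm along the world X axis.
T_world_board = make_transform(np.eye(3), [1000.0, 0.0, 0.0])

# Hypothetical pose-estimation result: board 500 mm straight ahead of the camera.
# This transform maps board coordinates into camera coordinates.
T_cam_board = make_transform(np.eye(3), [0.0, 0.0, 500.0])

# Camera pose in the world frame: world <- board <- camera.
T_world_cam = T_world_board @ invert_transform(T_cam_board)
camera_position_world = T_world_cam[:3, 3]
print(camera_position_world)
```

Here `T_cam_board` plays the role of the pose returned by a board or marker pose estimator, and `T_world_board` is whatever you have measured for the board's placement.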

ArUco markers are often used to estimate camera poses. Since we are again testing on the JeVois camera with its 120-degree fisheye lens, we recommend the 4x4_50 ArUco markers provided on JeVois Demo ArUco. You are also welcome to use other online applications, such as ArUco markers generator, to generate various types of ArUco markers.

Demonstration

Preparation

Dataset

In total, 371 images are captured consecutively and saved on the host PC using jevois_streaming.py. Two groups of images are selected to demonstrate the feasibility of our implementation:

- A consecutive sequence of images indexed from 015 to 026.

- A hand-picked set of images with arbitrary indexes.

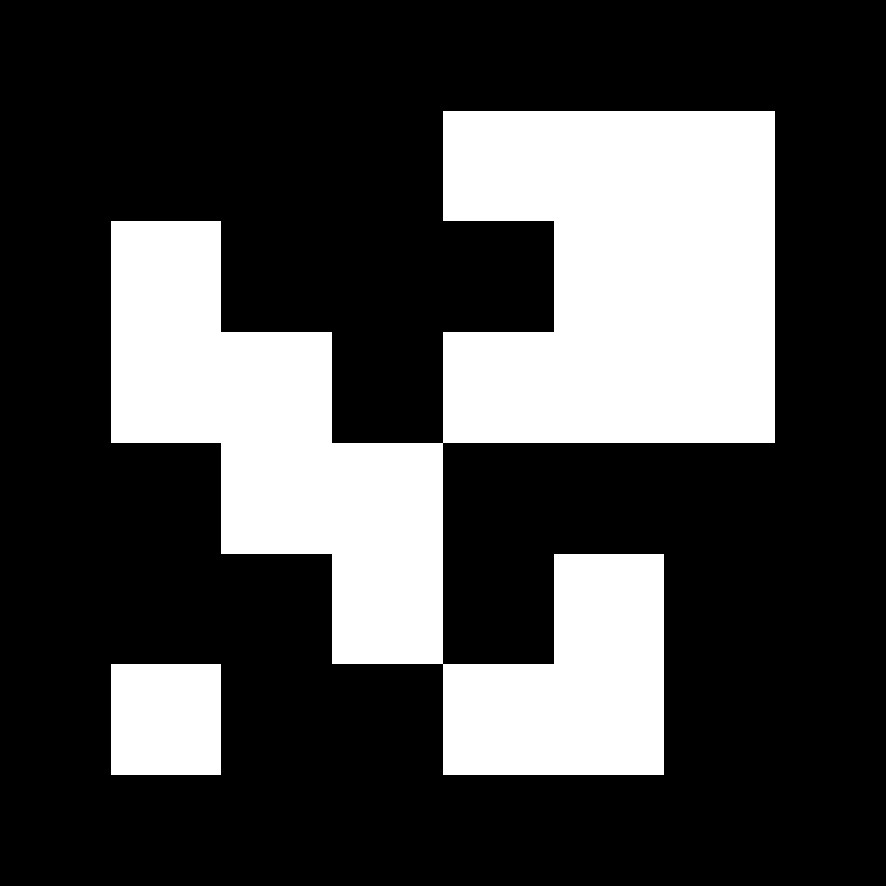

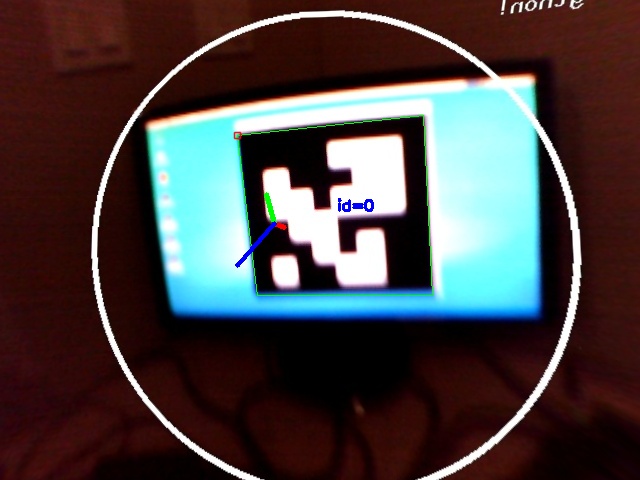

ArUco Marker 6x6_250-0

We use a single ArUco marker 6x6_250-0 in our experiments.

- dictionary: 6x6

- marker ID: 0

- size: 250 mm; the marker is generated with ArUco markers generator and displayed on a monitor at 100% zoom.

Code Snippet: posture_estimation.py

    ################################################################################
    #
    # IMPORTANT: READ BEFORE DOWNLOADING, COPYING AND USING.
    #
    # Copyright [2017] [ShenZhen Longer Vision Technology], Licensed under
    # ******** GNU General Public License, version 3.0 (GPL-3.0) ********
    # You are allowed to use this file, modify it, redistribute it, etc.
    # You are NOT allowed to use this file WITHOUT keeping the License.
    #
    # Longer Vision Technology is a startup located in Chinese Silicon Valley
    # NanShan, ShenZhen, China, (http://www.longervision.cn), which provides
    # the total solution to the area of Machine Vision & Computer Vision.
    # The founder Mr. Pei JIA has been advocating Open Source Software (OSS)
    # for over 12 years ever since he started his PhD's research in England.
    #
    # Longer Vision Blog is Longer Vision Technology's blog hosted on github
    # (http://longervision.github.io). Besides the published articles, a lot
    # more source code can be found at the organization's source code pool:
    # (https://github.com/LongerVision/OpenCV_Examples).
    #
    # For those who are interested in our blogs and source code, please do
    # NOT hesitate to comment on our blogs. Whenever you find any issue,
    # please do NOT hesitate to fire an issue on github. We'll try to reply
    # promptly.
    #
    # Version: 0.0.1
    # Author: JIA Pei
    # Contact: jiapei@longervision.com
    # URL: http://www.longervision.cn
    # Create Date: 2017-03-10
    # Modified Date: 2020-01-18
    ################################################################################

    # Standard imports
    import os

    import cv2
    import numpy as np
    from cv2 import aruco

    # Note: Dictionary_get(), DetectorParameters_create() and drawAxis() belong to
    # the legacy aruco API (opencv-contrib-python releases before 4.7).

    # Load calibrated parameters
    fs = cv2.FileStorage("calibration.yml", cv2.FILE_STORAGE_READ)
    camera_matrix = fs.getNode("camera_matrix").mat()
    dist_coeffs = fs.getNode("dist_coeff").mat()
    fs.release()

    image_size = (640, 480)

    # Fisheye undistortion maps; camera_matrix is reused as the new projection matrix.
    map1, map2 = cv2.fisheye.initUndistortRectifyMap(
        camera_matrix, dist_coeffs, np.eye(3), camera_matrix, image_size, cv2.CV_16SC2)

    # The remapped images are already undistorted, so pose estimation and axis
    # drawing on them use zero distortion rather than the fisheye coefficients.
    zero_dist = np.zeros((4, 1))

    aruco_dict = aruco.Dictionary_get(aruco.DICT_6X6_250)
    markerLength = 250  # Here, our measurement unit is millimeters.
    arucoParams = aruco.DetectorParameters_create()

    # We first save a sequence of ArUco images from various directions.
    imgDir = "../images/posture/original"  # Specify the image directory
    imgFileNames = [os.path.join(imgDir, fn) for fn in next(os.walk(imgDir))[2]]
    imgFileNames.sort()
    nbOfImgs = len(imgFileNames)

    count = 0  # number of images in which the marker has been detected
    for i in range(0, nbOfImgs):
        img = cv2.imread(imgFileNames[i], cv2.IMREAD_COLOR)

        # Enable the following 2 lines to save the original images.
        # filename = "original" + str(i).zfill(3) + ".jpg"
        # cv2.imwrite(filename, img)

        # Fisheye remapping; borderMode must be passed by keyword in Python.
        imgRemapped = cv2.remap(img, map1, map2, cv2.INTER_LINEAR, borderMode=cv2.BORDER_CONSTANT)
        # aruco.detectMarkers() requires a gray image.
        imgRemapped_gray = cv2.cvtColor(imgRemapped, cv2.COLOR_BGR2GRAY)
        filename = "remappedgray" + str(i).zfill(3) + ".jpg"
        cv2.imwrite(filename, imgRemapped_gray)

        # Detect ArUco markers.
        corners, ids, rejectedImgPoints = aruco.detectMarkers(imgRemapped_gray, aruco_dict, parameters=arucoParams)

        if ids is not None:  # if an ArUco marker is detected
            # Posture estimation from a single marker
            rvec, tvec, _ = aruco.estimatePoseSingleMarkers(corners, markerLength, camera_matrix, zero_dist)
            imgWithAruco = aruco.drawDetectedMarkers(imgRemapped, corners, ids, (0, 255, 0))
            # The axis length 100 can be changed according to your requirement.
            imgWithAruco = aruco.drawAxis(imgWithAruco, camera_matrix, zero_dist, rvec, tvec, 100)
            filename = "posturedrawn" + str(count).zfill(3) + "_" + str(i).zfill(3) + ".jpg"
            cv2.imwrite(filename, imgWithAruco)
            count += 1
        else:  # if no ArUco marker is detected
            imgWithAruco = imgRemapped  # display the remapped color image unchanged

        cv2.imshow("aruco", imgWithAruco)  # display
        if cv2.waitKey(10) & 0xFF == ord('q'):  # if 'q' is pressed, quit.
            break

    cv2.destroyAllWindows()
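The `rvec` and `tvec` returned by `aruco.estimatePoseSingleMarkers` describe the marker's pose in the camera frame, not the camera's pose. The sketch below shows one way to turn them into the camera position expressed in the marker frame. It assumes NumPy only and re-implements the Rodrigues formula by hand so that it runs without OpenCV (`cv2.Rodrigues` performs the same conversion). The function names and the sample values are hypothetical.

```python
import numpy as np


def rodrigues_to_matrix(rvec):
    """Convert an axis-angle rotation vector to a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    angle = np.linalg.norm(rvec)
    if angle < 1e-12:
        return np.eye(3)
    axis = rvec / angle
    # Skew-symmetric cross-product matrix of the rotation axis.
    skew = np.array([
        [0.0, -axis[2], axis[1]],
        [axis[2], 0.0, -axis[0]],
        [-axis[1], axis[0], 0.0],
    ])
    return np.eye(3) + np.sin(angle) * skew + (1.0 - np.cos(angle)) * (skew @ skew)


def camera_position_in_marker_frame(rvec, tvec):
    """Return the camera centre expressed in the marker's coordinate frame."""
    rotation = rodrigues_to_matrix(rvec)
    tvec = np.asarray(tvec, dtype=float).reshape(3)
    # Marker-to-camera mapping: x_cam = R @ x_marker + t.
    # The camera centre is x_cam = 0, hence x_marker = -R^T @ t.
    return -rotation.T @ tvec


# Hypothetical values: marker rotated 180 degrees about its X axis,
# 800 mm in front of the camera (same millimeter unit as markerLength).
rvec = np.array([np.pi, 0.0, 0.0])
tvec = np.array([0.0, 0.0, 800.0])
position = camera_position_in_marker_frame(rvec, tvec)
print(position)
```

Since `estimatePoseSingleMarkers` returns one rotation and one translation vector per detected marker, you would pass the entries for a single marker (for example `rvec[0]` and `tvec[0]`) to such a helper.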

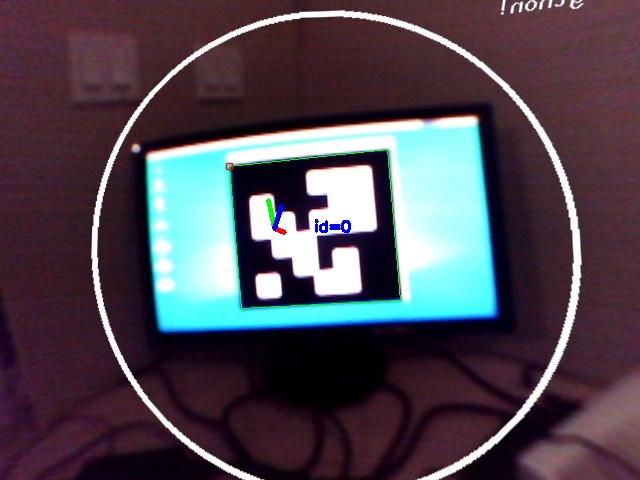

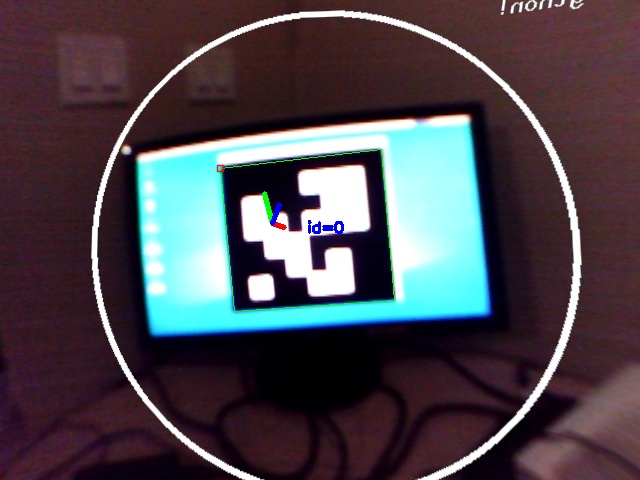

Demo 1: JeVois Fisheye Pose Estimation Based On ArUco Marker Detection

Intermediate Images: Undistorted Gray Images

All 371 original images are undistorted and converted to grayscale as intermediate results.

Sequence 1: indexes from 51 to 62


Sequence 2: indexes = 6 + 30*i
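As a quick illustration of the index formula, the sketch below lists the image indexes and the matching undistorted file names. The file name pattern follows posture_estimation.py. The range of i is an assumption (twelve images, i = 0 to 11, to match the length of Sequence 1), since the range is not stated here.

```python
# Assumption: twelve images, i = 0..11 (the range of i is not given in the text).
indexes = [6 + 30 * i for i in range(12)]

# File names as written by posture_estimation.py for the undistorted gray images.
filenames = ["remappedgray" + str(index).zfill(3) + ".jpg" for index in indexes]

print(indexes)
print(filenames[0], filenames[-1])
```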


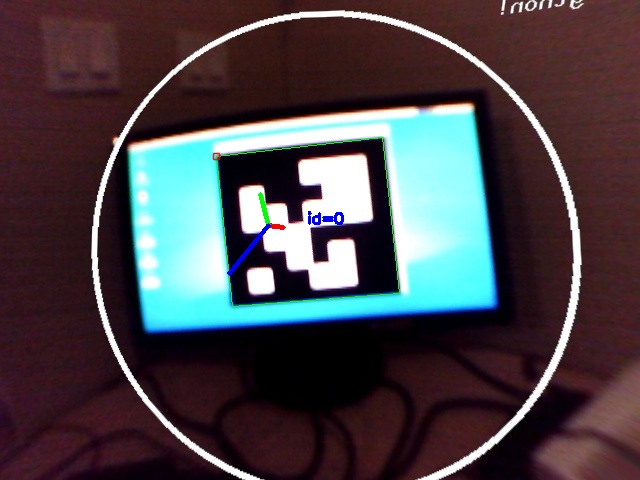

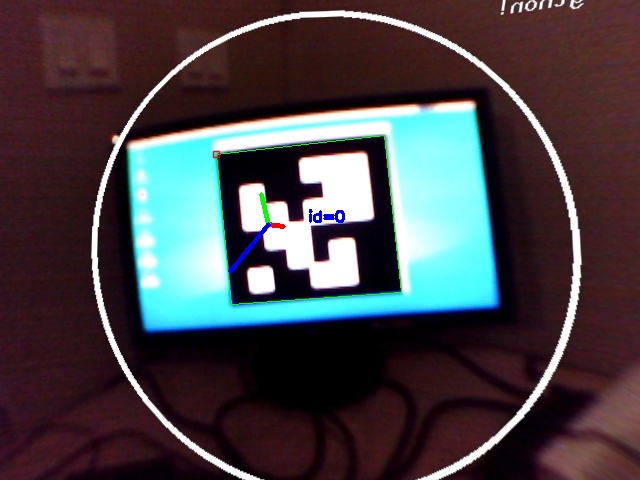

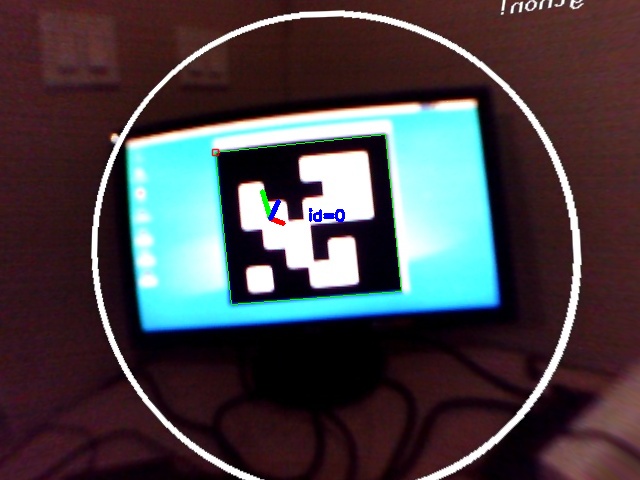

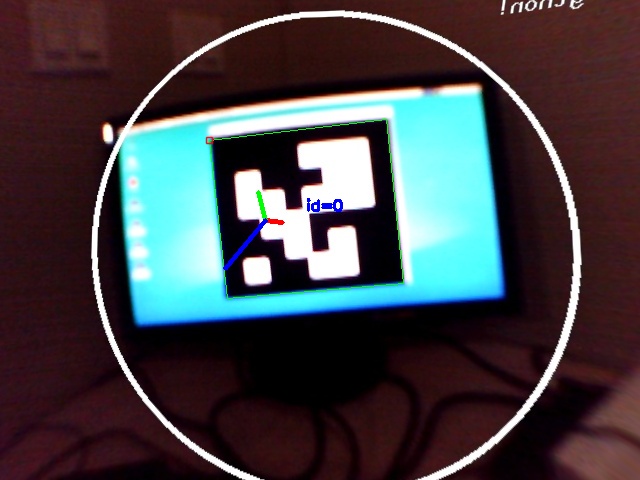

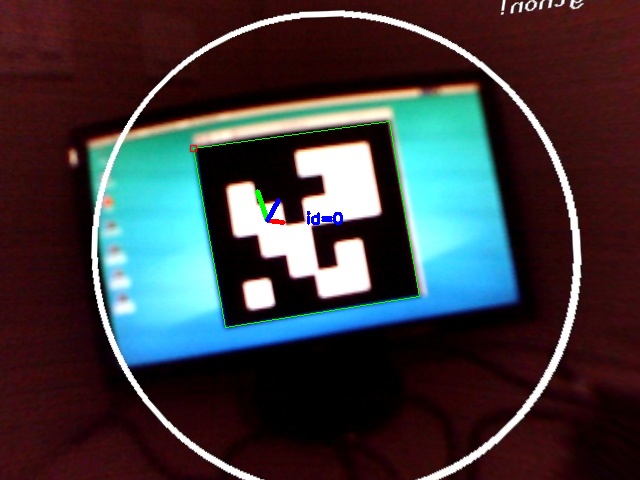

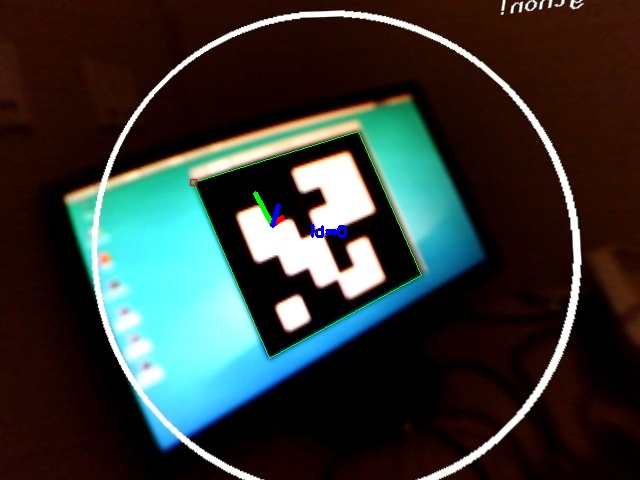

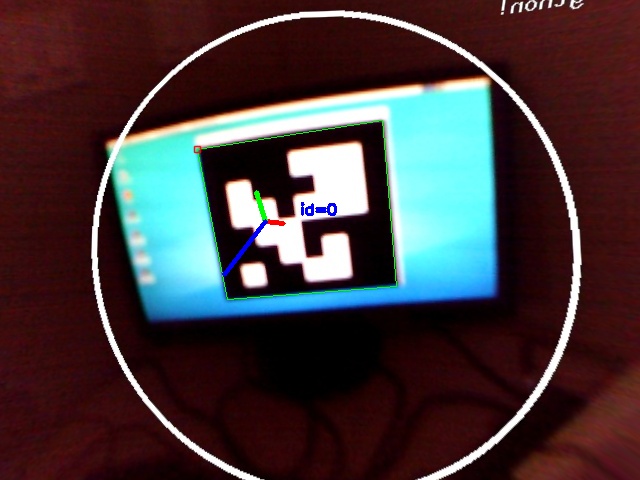

Results: Posture Axis Drawn

The ArUco marker is detected, and therefore the camera posture estimated, in only 284 of the 371 test images (about 76.5%).

Sequence 1: indexes from 51 to 62


Sequence 2: indexes = 6 + 30*i


Assignments

Please try to estimate camera posture using:

- a variety of markers:

  - A circle grid: refer to my blog Camera Posture Estimation Using Circle Grid Pattern

  - A ChArUco diamond marker: refer to my blog Camera Posture Estimation Using An ArUco Diamond Marker

  - An ArUco board: refer to my blog Camera Posture Estimation Using An ArUco Board

  - A ChArUco board: refer to my blog Camera Posture Estimation Using A ChArUco Board